Robotic surgery has reduced surgeon fatigue and shortened patient recovery times, but automating complex tasks like suturing—the process of stitching up an incision—remains challenging because it requires both adapting to variations in tissue and achieving precise, consistent movements.

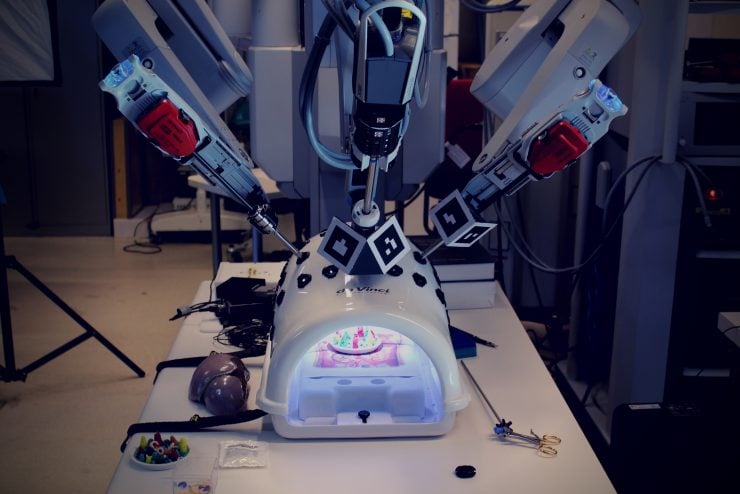

As part of an effort to enable surgical robots to perform such delicate tasks, a team of Johns Hopkins researchers developed a new simulation platform called SurgicAI and used it to train a simulated da Vinci robotic surgical system to suture by breaking the process down into smaller, more manageable skills. The team presented its work at the Thirty-Eighth Annual Conference on Neural Information Processing Systems (NeurIPS), held in December in Vancouver, Canada.

“Our goal was to enable the da Vinci and other robotic systems to perform these sorts of complex surgical tasks with minimal reliance on prior domain knowledge or handcrafted trajectory planning,” says senior author Anqi “Angie” Liu, an assistant professor in the Whiting School’s Department of Computer Science.

Building on the simulation provided in the AccelNet Surgical Robotics Challenge, which includes realistic models of surgical scenarios and medical instruments, the Hopkins team divided the process of suturing into subtasks like grasping a needle, inserting it into the skin, and pulling it back out.

The researchers then used reinforcement learning, a trial-and-error machine learning technique in which a system learns to choose the actions that maximize a reward signal, to train a specialized module for each subtask, while a coordinating program determines the sequence and timing of these subtasks. This hierarchical structure not only improves learning efficiency but also makes the system more robust and adaptable, the researchers say.
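
The division of labor between a coordinator and subtask modules can be illustrated with a toy control loop. This is a minimal sketch under loudly stated assumptions, not SurgicAI's code or API: the one-dimensional `State`, the action names, `NEEDLE_POS`, `FULL_DEPTH`, and every function name are hypothetical. In the researchers' system the subtask modules are trained with reinforcement learning; here they are scripted stand-ins, and the coordinator is a fixed rule.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical toy constants, not taken from SurgicAI.
NEEDLE_POS = 3  # 1D position where the needle lies
FULL_DEPTH = 2  # pushes needed before the needle tip exits the tissue


@dataclass
class State:
    gripper_pos: int = 0
    holding: bool = False
    depth: int = 0
    extracted: bool = False


def step(s: State, action: str) -> State:
    """Toy environment: apply one primitive action and return the new state."""
    if action == "move":
        s.gripper_pos += 1
    elif action == "close":
        s.holding = s.gripper_pos == NEEDLE_POS
    elif action == "push" and s.holding:
        s.depth += 1
    elif action == "pull" and s.depth >= FULL_DEPTH:
        s.extracted = True
    return s


@dataclass
class Skill:
    policy: Callable[[State], str]  # low-level policy: state -> primitive action
    done: Callable[[State], bool]   # termination condition for this subtask


# Scripted stand-ins for what would be separately trained RL policies.
SKILLS: Dict[str, Skill] = {
    "grasp": Skill(lambda s: "move" if s.gripper_pos < NEEDLE_POS else "close",
                   lambda s: s.holding),
    "insert": Skill(lambda s: "push", lambda s: s.depth >= FULL_DEPTH),
    "extract": Skill(lambda s: "pull", lambda s: s.extracted),
}


def coordinator(s: State) -> Optional[str]:
    """High-level policy: pick the next subtask (a fixed rule in this sketch)."""
    if not s.holding:
        return "grasp"
    if s.depth < FULL_DEPTH:
        return "insert"
    if not s.extracted:
        return "extract"
    return None  # all subtasks finished


def run_episode(max_steps: int = 50) -> Tuple[State, List[Tuple[str, str]]]:
    s, log = State(), []
    while len(log) < max_steps:
        name = coordinator(s)
        if name is None:
            break
        skill = SKILLS[name]
        # The chosen skill keeps control until its own termination condition holds.
        while not skill.done(s) and len(log) < max_steps:
            action = skill.policy(s)
            s = step(s, action)
            log.append((name, action))
    return s, log
```

Calling `run_episode()` returns the final state and a log of `(subtask, action)` pairs, in which control passes from grasping to inserting to extracting. Because each skill has its own policy and termination test, it can in principle be trained, debugged, or replaced independently, which is one reason hierarchical designs tend to learn more efficiently.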

“We also established a pipeline for collecting and preprocessing multimodal expert trajectories—for example, videos of real-life surgeons—for imitation learning,” says Jin Wu, a robotics graduate student on the research team. “By combining reinforcement and imitation learning (RL/IL), the robot learns both through interactions with the simulation and from expert guidance.”
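
The quote does not specify how the two learning signals are combined. One common pattern, shown here only as a hedged sketch and not as SurgicAI's actual objective, adds a behavior-cloning penalty to an actor-critic policy loss: the reinforcement learning term favors actions that a learned critic rates highly (knowledge gained through interaction with the simulation), while the imitation term pulls the policy toward the expert's actions on demonstration states. The function name, its arguments, and the `bc_weight` trade-off parameter are hypothetical.

```python
from typing import Sequence


def combined_actor_loss(
    q_values: Sequence[float],
    policy_actions: Sequence[Sequence[float]],
    expert_actions: Sequence[Sequence[float]],
    bc_weight: float = 1.0,
) -> float:
    """Hypothetical RL + imitation objective (lower is better).

    q_values: critic estimates Q(s, pi(s)) on states the agent visited in simulation.
    policy_actions / expert_actions: the policy's and the expert's actions on the
    same demonstration states, one action vector per state.
    """
    if not q_values or not expert_actions:
        raise ValueError("need at least one agent sample and one expert sample")
    if len(policy_actions) != len(expert_actions):
        raise ValueError("policy and expert action batches must align")
    # RL term: maximize the critic's value estimate, i.e. minimize its negative mean.
    rl_term = -sum(q_values) / len(q_values)
    # IL term (behavior cloning): mean squared Euclidean distance to expert actions.
    bc_term = sum(
        sum((p - e) ** 2 for p, e in zip(pa, ea))
        for pa, ea in zip(policy_actions, expert_actions)
    ) / len(expert_actions)
    return rl_term + bc_weight * bc_term
```

For example, `combined_actor_loss([1.0, 3.0], [[0.0, 1.0]], [[1.0, 1.0]], bc_weight=0.5)` combines an RL term of -2.0 with a behavior-cloning term of 1.0 to give -1.5. Raising `bc_weight` makes the policy stick more closely to the demonstrations; lowering it lets the policy rely more on what it discovers through trial and error.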

To foster collaboration and innovation across the surgical robotics research community, the researchers built a user-friendly interface for SurgicAI, complete with comprehensive instructions and demo videos, that lets other users easily define new tasks and implement their own custom algorithms. The platform also provides baselines and metrics that other researchers can use to compare and improve their methods.

“A critical challenge for us in the beginning was the lack of a standard benchmark for surgical policy performance,” explains co-author Adnan Munawar, an assistant research scientist in the Laboratory for Computational Sensing and Robotics. “Our contribution addresses this gap by not only providing a comprehensive learning environment for developing and testing robotic suturing techniques, but also by defining evaluation metrics that researchers can use to train and improve RL/IL methods. By sharing our platform, benchmarks, and evaluation metrics, we hope to establish a common ground for researchers to develop and improve their algorithms.”

So far, the researchers have validated their framework only in simulation; their next steps include evaluating how it performs on physical robots. They also plan to integrate vision-language models to improve high-level policy decision-making, make the system more robust to complex and unexpected scenarios, and explore reinforcement learning techniques with safety constraints to avoid potentially harmful actions.

In the meantime, the team encourages open-source community testing and looks forward to collaborating with the broader research community to further improve SurgicAI.

“Our work brings us closer to equipping medical robots with the intelligence needed to assist in complex surgical tasks, significantly reducing surgeons’ workload and enhancing their surgical efficiency,” Liu says. “This approach not only benefits medical robotics, but could also potentially extend to all types of robots that need to be able to dexterously manipulate the environment around them.”

Additional authors of this work include Peter Kazanzides, a research professor of computer science, and Haoying “Jack” Zhou, a visiting graduate scholar in the Laboratory for Computational Sensing and Robotics (LCSR) from Worcester Polytechnic Institute.

This work was supported by the National Science Foundation and by Liu’s Johns Hopkins Discovery and Amazon Research Awards, as well as a seed grant from the Johns Hopkins Institute for Assured Autonomy.

This article originally appeared on the Department of Computer Science website.