Yinzhi Cao, Johns Hopkins Whiting School of Engineering

Philippe Burlina, Johns Hopkins Applied Physics Laboratory

Current AI frameworks using deep learning (DL) have met or exceeded human capabilities for tasks such as classifying images and recognizing facial expressions or objects. Medical AI technology is also approaching the performance of human clinicians in diagnostic tasks. This success, however, masks real issues in AI assurance that will impede the deployment of these machine-learning algorithms in practice.

Two of the most critical concerns impeding AI assurance are privacy, including compliance with the Health Insurance Portability and Accountability Act (HIPAA), and fairness. DL system performance depends strongly on the availability of large, diverse, and representative training data sets, yet such data sets often suffer from diagnostic bias in factors such as gender, ethnicity, and/or disease type.

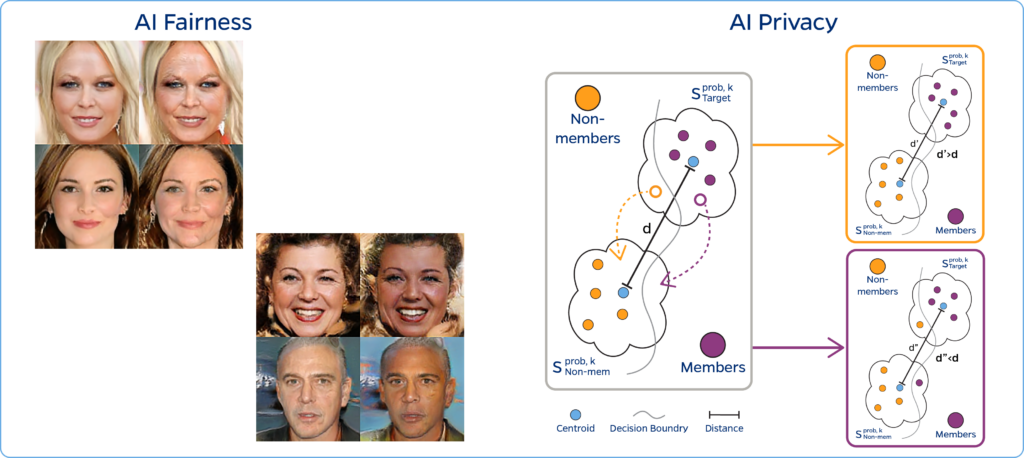

This project aims to develop algorithms that assure AI fairness and AI privacy. Researchers are extending generative adversarial networks and two-player adversarial methods to create representative medical images that improve diagnoses for underrepresented populations. They are also developing methods to protect privacy by removing sensitive information from data sets while maintaining data fidelity.

A JavaScript demo is available that shows how image features can change across different dimensions.

This research addresses fairness and privacy by developing generative deep learning methods that create or alter images with respect to specific attributes while keeping other attributes unchanged.
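The idea of changing one attribute while holding the others fixed can be illustrated with a toy example. The sketch below is not the project's method: it assumes a hypothetical 4-dimensional latent code and two hypothetical, mutually orthogonal attribute directions (`TARGET_ATTRIBUTE` and `OTHER_ATTRIBUTE`), and it leaves out the generative model that would turn a latent code into an image. Under those assumptions, shifting the code along one direction changes that attribute's score and leaves the other untouched.

```python
import math


def unit(direction):
    """Return the direction scaled to unit length."""
    norm = math.sqrt(sum(x * x for x in direction))
    if norm == 0:
        raise ValueError("direction must be non-zero")
    return [x / norm for x in direction]


def score(latent, direction):
    """Projection of the latent code onto an attribute direction."""
    u = unit(direction)
    return sum(a * b for a, b in zip(latent, u))


def alter(latent, direction, strength):
    """Shift the latent code along a single attribute direction."""
    u = unit(direction)
    return [a + strength * b for a, b in zip(latent, u)]


# Hypothetical attribute directions, chosen to be orthogonal for illustration.
TARGET_ATTRIBUTE = [1.0, 1.0, 0.0, 0.0]
OTHER_ATTRIBUTE = [0.0, 0.0, 1.0, 0.0]

latent = [0.2, -0.4, 0.7, 0.1]  # illustrative latent code, not real data
edited = alter(latent, TARGET_ATTRIBUTE, strength=2.0)

target_change = score(edited, TARGET_ATTRIBUTE) - score(latent, TARGET_ATTRIBUTE)
other_change = score(edited, OTHER_ATTRIBUTE) - score(latent, OTHER_ATTRIBUTE)
```

In a trained generative model, attribute directions are rarely this cleanly separated, which is part of what makes altering a single attribute without affecting others a research problem.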