Mark Dredze, Johns Hopkins Whiting School of Engineering

Anna Buczak, Johns Hopkins Applied Physics Laboratory

AI systems often rely on extremely complex models, which makes it difficult to understand the reasoning behind a system’s actions, predictions, or classifications. Many people are reluctant to use AI systems in important settings when those systems cannot explain their decisions. By contrast, AI systems that humans can interpret, or that can explain their own decisions, engender trust in the technology and increase usability.

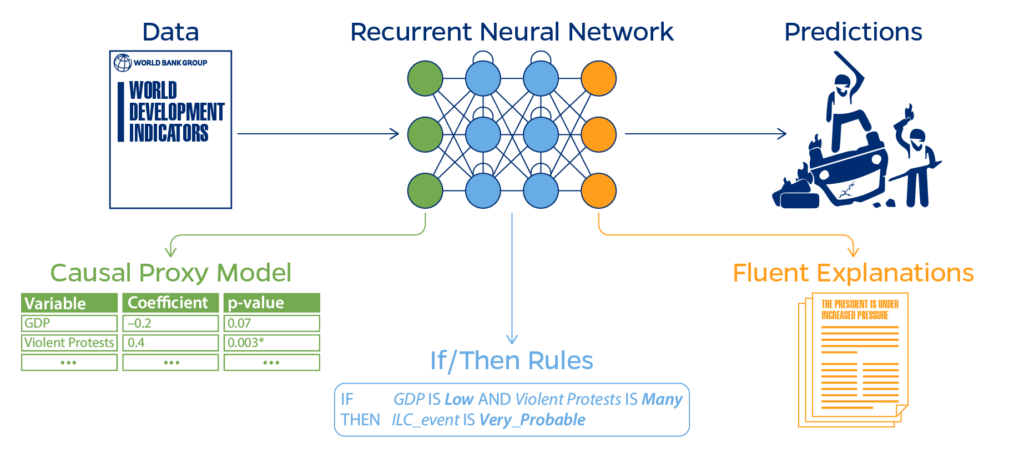

The goal of this research is to develop new methods for explaining AI behavior. It explores three in particular: (1) inferring causal connections between system inputs and decisions, (2) generating explainable rules that reflect system decisions, and (3) selecting text summaries that justify model predictions.

Method 1 will develop a causal graph that connects system inputs to outputs. The causal model will be trained on a recurrent neural network to test whether causal statements can be produced from complex sequential models. Method 2 will combine SHapley Additive exPlanations (SHAP), which can explain individual predictions, with Fuzzy Associative Rule Mining (FARM) to derive rules for how features contribute to AI system behavior. Method 3 considers a setting in which a large text corpus contains explanations that support system behavior; text will be aligned to specific system predictions to provide decision justifications.
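To make the per-prediction attributions behind method 2 more concrete, the following is a minimal sketch that computes exact Shapley values for a single prediction by enumerating feature coalitions. It is an illustration only, not the project's implementation: it does not use the SHAP library (which approximates these values efficiently for real models), and `toy_model`, `baseline`, and `shapley_values` are hypothetical names introduced here. Features left out of a coalition are assumed to take a fixed baseline value.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction (hypothetical helper).

    Features absent from a coalition are replaced by their baseline value.
    Cost grows exponentially with the number of features, so this is only
    practical for very small inputs.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                without_i = [x[j] if j in subset else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                # Marginal contribution of feature i to this coalition
                phi[i] += weight * (predict(with_i) - predict(without_i))
    return phi


def toy_model(features):
    # Hypothetical stand-in for an AI system: two linear terms plus an interaction
    a, b, c = features
    return 2.0 * a + 1.0 * b + 0.5 * a * c


x = [1.0, 3.0, 2.0]          # the input being explained
baseline = [0.0, 0.0, 0.0]   # assumed reference input
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

The attributions sum to the difference between the prediction for `x` and the prediction for `baseline`, and the interaction term is split evenly between the two features involved. Per-feature attributions of this kind are the sort of quantity that a rule-mining step could then summarize into rules.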

The proposed work promises to significantly advance the field of explainable AI systems, ultimately engendering trust in the technology.

Irregular Leadership Change forecasts can be found here: http://iaa-ccube-dmz.outer.jhuapl.edu/