Yair Amir, Johns Hopkins Whiting School of Engineering

Tamim Sookoor, Johns Hopkins Applied Physics Laboratory

In recent years, reinforcement learning (RL) algorithms have brought dramatic improvements to diverse tasks: defeating world champions in games such as Go and StarCraft, controlling robots, managing industrial control systems, optimizing supply chains, calibrating machines, and personalizing marketing and ad-recommender systems. But one of their main limitations is that their failure modes are opaque: it is difficult to understand how these systems work, or to predict when and how they will fail in a given scenario, without actually running the system.

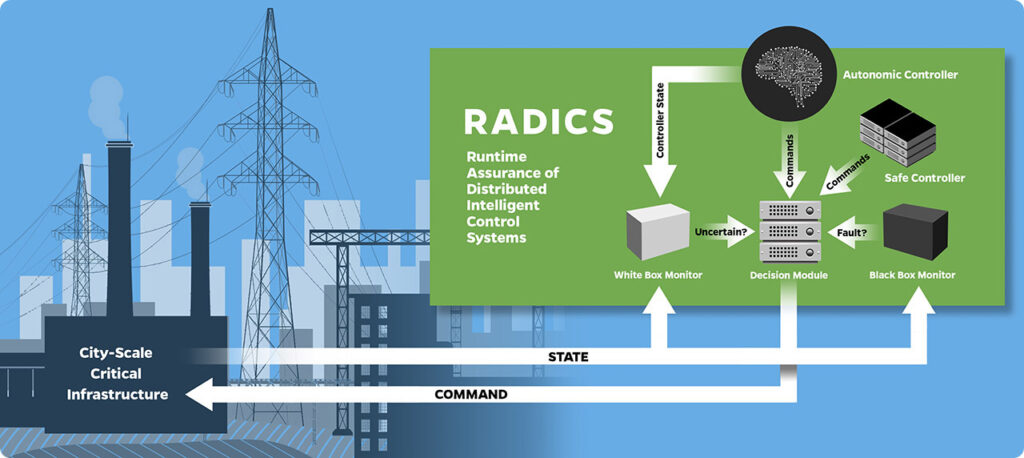

For such autonomous systems to be assured, two key issues must be addressed: (1) fault tolerance and (2) controller competence. This research will develop two realistic city applications: an electrical smart grid and an intelligent traffic control system. The goal is to assure the safety of critical infrastructure systems controlled by RL.

This research explores fault tolerance and controller competence by producing (1) an easy-to-understand black-box monitor that avoids system failures by detecting possible breaches of the system’s correctness invariants, (2) a white-box monitor that estimates the competence of the autonomous control algorithm, and (3) a decision module that predicts which decisions could drive the system into a high-risk state. The goal? To assure safety by combining information from a Simplex-based system monitor with an RL competence estimator.
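The combination described above resembles the classic Simplex architecture, in which a decision module hands control from a high-performance controller to a simpler, trusted baseline controller whenever continuing would be unsafe. The following is a minimal, hypothetical sketch of that switching logic, not the project's design. The names `SimplexSupervisor`, `predict_next`, `competence`, and `competence_threshold`, the 0.8 threshold, and the assumption that a next-state predictor is available are all illustrative. The toy usage models a single grid frequency deviation with one invariant.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Sequence

State = Sequence[float]
Action = float


@dataclass
class SimplexSupervisor:
    """Hypothetical Simplex-style switch between an RL controller and a safe baseline."""

    rl_controller: Callable[[State], Action]
    safe_controller: Callable[[State], Action]
    # Assumed black-box model that predicts the next state for a (state, action) pair.
    predict_next: Callable[[State, Action], State]
    # Correctness invariants: each returns True when a state is acceptable.
    invariants: Sequence[Callable[[State], bool]]
    # Assumed white-box estimator: competence score in [0, 1] of the RL policy at a state.
    competence: Callable[[State], float]
    competence_threshold: float = 0.8  # illustrative value, not from the source

    def invariants_hold(self, state: State) -> bool:
        return all(inv(state) for inv in self.invariants)

    def choose(self, state: State) -> tuple[Action, str]:
        """Return the action to apply and a label explaining which controller was used."""
        proposed = self.rl_controller(state)
        # White-box check: fall back if the RL policy is not competent in this state.
        if self.competence(state) < self.competence_threshold:
            return self.safe_controller(state), "safe: low competence"
        # Black-box check: fall back if the RL action is predicted to breach an invariant.
        if not self.invariants_hold(self.predict_next(state, proposed)):
            return self.safe_controller(state), "safe: predicted invariant breach"
        return proposed, "rl"


# Toy usage: state = [frequency deviation], invariant |deviation| <= 0.5 (hypothetical units).
sup = SimplexSupervisor(
    rl_controller=lambda s: 0.4,              # toy RL policy: always pushes +0.4
    safe_controller=lambda s: -0.5 * s[0],    # toy baseline: proportional correction
    predict_next=lambda s, a: [s[0] + a],     # toy dynamics model
    invariants=[lambda s: abs(s[0]) <= 0.5],
    competence=lambda s: 0.9 if abs(s[0]) < 0.3 else 0.5,
)

for x in (0.0, 0.2, 0.4):
    print(x, sup.choose([x]))
```

Checking competence before invariants is one possible ordering; a real decision module could instead weigh both signals together when scoring risk.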